Web3 Has a Memory Problem — And We Finally Have a Fix

Web3 has a memory problem. Not in the “we forgot something” sense, but in the core architectural sense. It doesn’t have a real memory layer.

Blockchains today don’t look completely alien next to traditional computers, but a foundational piece of legacy computing is still missing: a memory layer built for decentralization that can support the next iteration of the internet.

Muriel Médard is a speaker at Consensus 2025, May 14-16.

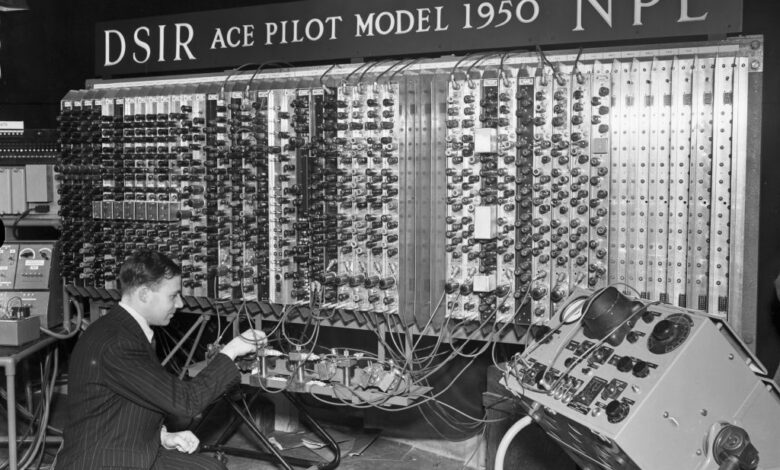

After World War II, John von Neumann laid out the architecture for modern computers. Every computer needs input and output, a CPU for control and arithmetic, and memory to store the latest version of its data, along with a “bus” to retrieve and update that data in memory. That memory, commonly known as RAM, and the architecture around it have been the foundation of computing for decades.

At its core, Web3 is a decentralized computer — a “world computer.” At the higher layers, it’s fairly recognizable: operating systems (EVM, SVM) running on thousands of decentralized nodes, powering decentralized applications and protocols.

But when you dig deeper, something’s missing. The memory layer, essential for storing, accessing and updating short-term and long-term data, doesn’t look like the memory bus or memory unit von Neumann envisioned.

Instead, it’s a mashup of different best-effort approaches, and the overall result is messy, inefficient and hard to navigate.

Here’s the problem: if we’re going to build a world computer that’s fundamentally different from the von Neumann model, there had better be a really good reason to do so. Right now, Web3’s memory layer isn’t just different; it’s convoluted and inefficient. Transactions are slow. Storage is sluggish and costly. Scaling for mass adoption with the current approach is nigh impossible. And that’s not what decentralization was supposed to be about.

But there is another way.

A lot of people in this space are trying their best to work around this limitation, and we’re now at a point where the current workarounds simply cannot keep up. This is where algebraic coding comes in: it uses equations to represent data, gaining efficiency, resilience and flexibility.
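As a minimal illustration of “equations to represent data,” here is the kind of linear algebra that network coding rests on. The notation is ours, not the article’s: the data is split into k source packets x_1, …, x_k, and each transmitted packet y_j is a linear combination of them with coefficients drawn from a finite field F_q. Any set S of k received packets whose coefficient matrix A_S is invertible is enough to recover the original data.

```latex
y_j = \sum_{i=1}^{k} a_{ji}\, x_i \quad (a_{ji} \in \mathbb{F}_q),
\qquad
\mathbf{y}_S = A_S\, \mathbf{x}
\;\Longrightarrow\;
\mathbf{x} = A_S^{-1}\, \mathbf{y}_S \quad \text{whenever } \det A_S \neq 0
```

The receiver does not care which particular packets arrive, only that it collects k linearly independent ones.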

The core problem is this: how do we implement decentralized memory for Web3?

A new memory infrastructure

This is why I took the leap from academia, where I held the role of MIT NEC Chair and Professor of Software Science and Engineering, to dedicate myself, together with a team of experts, to advancing high-performance memory for Web3.

I saw something bigger: the potential to redefine how we think about computing in a decentralized world.

My team at Optimum is creating decentralized memory that works like a dedicated computer. Our approach is powered by Random Linear Network Coding (RLNC), a technology developed in my MIT lab over nearly two decades. It’s a proven data coding method that maximizes throughput and resilience in high-reliability networks from industrial systems to the internet.

Data coding is the process of converting information from one format to another for efficient storage, transmission or processing. It has been around for decades, and many variants of it are in use in networks today. RLNC is a modern approach to data coding that is well suited to decentralized computing. Rather than forwarding the original packets as-is, it sends random linear combinations of them across a network of nodes, so any node that collects enough independent combinations can reconstruct the data, ensuring high speed and efficiency.
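The sketch below shows the encode/decode idea in Python. It is a toy, not Optimum’s implementation: it works over the prime field GF(257) so the arithmetic is plain modular integer math (practical RLNC implementations often use GF(2^8) instead), and the function names `split`, `encode`, `decode` and `roundtrip` are hypothetical.

```python
import random

P = 257  # prime field GF(257): a simplification; real deployments often use GF(2^8)


def split(data: bytes, k: int) -> list[list[int]]:
    """Split data into k equal-length source packets, zero-padding the tail."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    return [list(padded[i * size:(i + 1) * size]) for i in range(k)]


def encode(packets: list[list[int]], rng: random.Random) -> tuple[list[int], list[int]]:
    """Produce one coded packet: random coefficients plus the matching linear combination."""
    coeffs = [rng.randrange(P) for _ in packets]
    payload = [sum(c * pkt[j] for c, pkt in zip(coeffs, packets)) % P
               for j in range(len(packets[0]))]
    return coeffs, payload


def decode(coded: list[tuple[list[int], list[int]]], k: int):
    """Gauss-Jordan elimination mod P; returns the k source packets, or None if rank < k."""
    rows = [list(c) + list(p) for c, p in coded]
    for col in range(k):
        pivot = next((r for r in range(col, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            return None  # not enough independent packets yet
        rows[col], rows[pivot] = rows[pivot], rows[col]
        inv = pow(rows[col][col], -1, P)  # modular inverse (Python 3.8+)
        rows[col] = [v * inv % P for v in rows[col]]
        for r in range(len(rows)):
            if r != col and rows[r][col] != 0:
                f = rows[r][col]
                rows[r] = [(a - f * b) % P for a, b in zip(rows[r], rows[col])]
    return [row[k:] for row in rows[:k]]


def roundtrip(message: bytes, k: int = 4, seed: int = 7) -> bytes:
    """Collect random coded packets until the message is decodable, then rebuild it."""
    rng = random.Random(seed)
    source = split(message, k)
    received = []
    while (out := decode(received, k)) is None:
        received.append(encode(source, rng))
    # Drop the zero padding (assumes the message itself has no trailing NUL bytes).
    return bytes(b for pkt in out for b in pkt).rstrip(b"\0")
```

Each coded packet carries its coefficient vector, so a receiver needs no coordination about which combinations were sent; it simply keeps collecting until elimination reaches full rank.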

With multiple engineering awards from top global institutions, more than 80 patents, and numerous real-world deployments, RLNC is no longer just a theory. RLNC has garnered significant recognition, including the 2009 IEEE Communications Society and Information Theory Society Joint Paper Award for the work “A Random Linear Network Coding Approach to Multicast.” RLNC’s impact was acknowledged with the IEEE Koji Kobayashi Computers and Communications Award in 2022.

RLNC is now ready for decentralized systems, enabling faster data propagation, efficient storage, and real-time access, making it a key solution for Web3’s scalability and efficiency challenges.

Why this matters

Let’s take a step back. Why does all of this matter? Because we need memory for the world computer that’s not just decentralized but also efficient, scalable and reliable.

Currently, blockchains rely on best-effort, ad hoc solutions that only partially achieve what memory does in high-performance computing. What they lack is a unified memory layer that encompasses both the memory bus for data propagation and the RAM for data storage and access.

The bus part of the computer should not become the bottleneck, as it does now. Let me explain.

“Gossip” is the common method for data propagation in blockchain networks. It is a peer-to-peer communication protocol in which nodes exchange information with random peers to spread data across the network. In its current implementation, it struggles at scale.

Imagine you need 10 pieces of information from neighbors who repeat what they’ve heard. When you start talking to them, almost everything you hear is new. But as you approach nine out of 10, the chance of hearing something new from a neighbor drops, making the final piece of information the hardest to get: once you have nine, there is a 90% chance that the next thing you hear is something you already know.

This is how blockchain gossip works today — efficient early on, but redundant and slow when trying to complete the information sharing. You would have to be extremely lucky to get something new every time.
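The neighbor analogy is the classic coupon-collector problem. Assuming each conversation yields one of the n pieces uniformly at random (a simplification of real gossip), the expected number of conversations needed to hear all n pieces is n(1 + 1/2 + … + 1/n). The hypothetical helpers below compute that exactly and check it with a quick simulation.

```python
import random
from fractions import Fraction


def expected_contacts(n: int) -> Fraction:
    """Exact coupon-collector expectation: with i pieces known, a new one takes n/(n - i) tries on average."""
    return sum(Fraction(n, n - i) for i in range(n))


def p_redundant(known: int, n: int) -> Fraction:
    """Probability that the next uniformly random piece is one you already have."""
    return Fraction(known, n)


def simulate(n: int, trials: int = 10_000, seed: int = 1) -> float:
    """Monte Carlo check: average number of conversations until all n pieces are heard."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        heard = set()
        while len(heard) < n:
            heard.add(rng.randrange(n))
            total += 1
    return total / trials


# expected_contacts(10) == Fraction(7381, 252), roughly 29.3 conversations
# p_redundant(9, 10) == Fraction(9, 10), the article's 90% figure
```

Under these assumptions you need roughly three times as many conversations as there are pieces, and the last two pieces alone account for 15 of them on average.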

With RLNC, we get around the core scalability issue in current gossip. RLNC works as though you managed to get extremely lucky: almost every time you hear something, it happens to be information that is new to you. That means much greater throughput and much lower latency. This RLNC-powered gossip is our first product, which validators can integrate through a simple API call to optimize data propagation for their nodes.
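Why RLNC behaves as if you were “extremely lucky” can be made precise under simplifying assumptions of our own: coefficient vectors are drawn uniformly from GF(q)^k, the sender mixes all k source packets, and q = 256. If you already hold r independent packets, a new one adds nothing only when its coefficient vector falls inside their span, which happens with probability q^r / q^k. The function names below are hypothetical.

```python
from fractions import Fraction


def p_new_uncoded(have: int, k: int) -> Fraction:
    """Plain gossip: a uniformly random piece is new with probability (k - have) / k."""
    return Fraction(k - have, k)


def p_new_rlnc(have: int, k: int, q: int = 256) -> Fraction:
    """RLNC: a uniformly random vector in GF(q)^k lies outside the span of the
    `have` independent packets already held with probability 1 - q^(have - k)."""
    return 1 - Fraction(1, q ** (k - have))


def expected_packets(k: int, p_new) -> Fraction:
    """Expected packets received until all k are in hand: sum of 1/p over each step."""
    return sum(1 / p_new(have, k) for have in range(k))
```

With nine of 10 pieces in hand, plain gossip delivers something new only 1 time in 10, while a random coded packet is new with probability 255/256, and collecting all 10 takes about 10.004 coded packets on average instead of about 29.3. Real relays recode only what they hold, so actual numbers depend on the protocol.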

Let us now examine the memory part. It helps to think of memory as dynamic storage, like RAM in a computer or, for that matter, our closet. Decentralized RAM should mimic a closet; it should be structured, reliable, and consistent. A piece of data is either there or not, no half-bits, no missing sleeves. That’s atomicity. Items stay in the order they were placed — you might see an older version, but never a wrong one. That’s consistency. And, unless moved, everything stays put; data doesn’t disappear. That’s durability.
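The three closet properties map onto familiar storage guarantees. The toy below is a hypothetical, single-process illustration, not a design for deRAM: every write builds a complete new version before publishing it, rejected writes change nothing, readers can ask for an older version but never see a half-applied one, and nothing is overwritten. It models durability only as “data stays unless removed”; surviving crashes would need persistence that is out of scope here.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Optional


@dataclass(frozen=True)
class Version:
    number: int
    items: MappingProxyType  # read-only view, so a stored version can never be edited in place


class ClosetStore:
    """Hypothetical toy store: atomic writes, versioned reads, nothing overwritten."""

    def __init__(self) -> None:
        self._versions = [Version(0, MappingProxyType({}))]

    def put(self, updates: dict) -> int:
        # Atomicity: validate everything first; a rejected write leaves the store untouched.
        if any(value is None for value in updates.values()):
            raise ValueError("incomplete item: nothing was stored")
        merged = {**self._versions[-1].items, **updates}
        # Publish the complete new version in a single append.
        self._versions.append(Version(len(self._versions), MappingProxyType(merged)))
        return len(self._versions) - 1

    def get(self, key, version: Optional[int] = None):
        # Consistency: each version is a complete snapshot, possibly older but never mixed.
        # Durability (in this toy): old versions are kept, so earlier data never disappears.
        snapshot = self._versions[-1] if version is None else self._versions[version]
        return snapshot.items.get(key)
```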

Instead of the closet, what do we have? Mempools are not something we keep around in computers, so why do we do that in Web3? The main reason is that there is not a proper memory layer. If we think of data management in blockchains as managing clothes in our closet, a mempool is like having a pile of laundry on the floor, where you are not sure what is in there and you need to rummage.

Current delays in transaction processing can be extremely high on any single chain. On Ethereum, for example, it takes two epochs, or about 12.8 minutes, to finalize a transaction. Without decentralized RAM, Web3 relies on mempools, where transactions sit until they’re processed, resulting in delays, congestion and unpredictability.
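The 12.8-minute figure follows from Ethereum’s proof-of-stake timing parameters of 12-second slots and 32 slots per epoch:

```latex
2\ \text{epochs} \times 32\ \tfrac{\text{slots}}{\text{epoch}} \times 12\ \tfrac{\text{s}}{\text{slot}}
= 768\ \text{s} = 12.8\ \text{min}
```

In practice, the exact wait can also depend on where in an epoch a transaction lands.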

Full nodes store everything, bloating the system and making retrieval complex and costly. In computers, RAM keeps what is currently needed, while less-used data moves to cold storage, perhaps in the cloud or on disk. Full nodes are like a closet holding every piece of clothing you’ve ever worn, from your baby clothes until now.
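The RAM-versus-cold-storage split is what a two-tier cache does. As a hypothetical sketch (not how Optimum’s deRAM works), the class below keeps a bounded hot tier ordered by recent use, demotes the least recently used items to a cold tier, and promotes them back when they are read again. In the article’s analogy, a full node is like a store that never evicts anything.

```python
from collections import OrderedDict


class TieredStore:
    """Hypothetical two-tier store: a small hot tier (like RAM) backed by a cold tier (like disk)."""

    def __init__(self, hot_capacity: int) -> None:
        self.hot_capacity = hot_capacity
        self.hot: OrderedDict = OrderedDict()  # least recently used first, most recent last
        self.cold: dict = {}

    def put(self, key, value) -> None:
        self.hot[key] = value
        self.hot.move_to_end(key)  # mark as most recently used
        self.cold.pop(key, None)  # a key lives in exactly one tier
        self._evict()

    def get(self, key):
        if key in self.hot:
            self.hot.move_to_end(key)
            return self.hot[key]
        value = self.cold.pop(key)  # raises KeyError if the key was never stored
        self.put(key, value)  # promote back to the hot tier
        return value

    def _evict(self) -> None:
        # Demote least recently used items until the hot tier fits its budget.
        while len(self.hot) > self.hot_capacity:
            key, value = self.hot.popitem(last=False)
            self.cold[key] = value
```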

We don’t do this on our computers, but full nodes exist in Web3 because storage and read/write access aren’t optimized. With RLNC, we create decentralized RAM (deRAM) for timely, updateable state in a way that is economical, resilient and scalable.

DeRAM and data propagation powered by RLNC can solve Web3’s biggest bottlenecks by making memory faster, more efficient and more scalable. Together they optimize data propagation, reduce storage bloat and enable real-time access without compromising decentralization. Memory has long been the missing piece of the world computer, but not for much longer.